AI Ethics Frameworks US Businesses Must Implement by 2025

US businesses must implement robust AI ethics frameworks by 2025 to navigate evolving regulations and avoid significant penalties, ensuring responsible AI development and deployment for sustained growth and public trust.

The rapid advancement of artificial intelligence (AI) presents unprecedented opportunities for innovation and efficiency, yet it also introduces complex ethical dilemmas. For US businesses, the urgency to adopt and integrate robust AI ethics frameworks has never been greater. With 2025 on the horizon, organizations face increasing pressure from regulators, consumers, and employees to ensure their AI systems are fair, transparent, and accountable. Falling short of these expectations, and of the laws that increasingly codify them, can result in severe penalties, reputational damage, and a loss of public trust. This article examines the critical frameworks US businesses must implement to navigate this evolving landscape and thrive responsibly.

Understanding the Urgency of AI Ethics in the US Business Landscape

The imperative for US businesses to prioritize AI ethics stems from a confluence of factors, including emerging regulations, heightened public scrutiny, and the inherent risks of unchecked AI deployment. As AI systems become more sophisticated and more deeply integrated into daily operations, their potential for both positive and negative societal impact grows with them. Businesses that proactively address these ethical considerations position themselves as leaders in responsible innovation, fostering trust and mitigating future liabilities.

The regulatory environment is evolving rapidly, with government agencies and legislatures at both the federal and state levels exploring ways to govern AI. Although the US has no single, comprehensive federal AI law, sector-specific guidelines and state-level initiatives are already shaping the compliance landscape. This patchwork of rules requires a proactive, adaptable approach so that businesses are not caught off guard by new mandates.

The Growing Regulatory Landscape

Several key governmental bodies are actively working to establish AI guidelines. The National Institute of Standards and Technology (NIST) AI Risk Management Framework, for instance, provides a voluntary yet influential guide for managing AI risks. Meanwhile, individual states are also stepping up, with California and New York leading the charge in proposing or enacting AI-related legislation. These initiatives often focus on protecting consumer rights, ensuring data privacy, and preventing algorithmic bias.

- Federal Initiatives: NIST AI RMF, White House Executive Orders on AI.

- State and Local Regulations: California Consumer Privacy Act (CCPA) rulemaking on automated decision-making technology, New York City’s automated employment decision tool law (Local Law 144).

- Sector-Specific Rules: FDA guidance for AI in medical devices, banking regulations addressing algorithmic fairness.

The financial and reputational stakes are significant. Non-compliance can lead to hefty fines, legal challenges, and a severe blow to a company’s brand image. Beyond legal ramifications, consumers are increasingly aware of AI’s ethical implications and are more likely to support businesses that demonstrate a clear commitment to responsible AI practices. Therefore, integrating AI ethics is not merely a compliance exercise but a strategic business imperative.

Framework 1: NIST AI Risk Management Framework (AI RMF)

The National Institute of Standards and Technology (NIST) has emerged as a crucial voice in guiding AI development in the US. Its AI Risk Management Framework (AI RMF) offers a comprehensive, voluntary set of guidelines designed to help organizations manage the risks associated with AI systems. While not legally binding, the AI RMF is widely considered a benchmark for best practices and is increasingly referenced by regulators and industry leaders.

The framework emphasizes a holistic approach to AI risk, covering the entire AI lifecycle from design and development to deployment and monitoring. It encourages organizations to integrate risk management processes into their existing governance structures, ensuring that ethical considerations are embedded from the outset rather than being an afterthought. This proactive stance helps businesses identify, measure, and mitigate potential harms before they manifest.

Core Components of the NIST AI RMF

The NIST AI RMF is structured around four core functions: Govern, Map, Measure, and Manage. Each function outlines specific activities and outcomes that contribute to a robust AI risk management strategy. Adhering to these components helps create a structured approach to addressing AI’s ethical challenges.

- Govern: Establish an organizational culture of risk management, outlining policies, roles, and responsibilities for AI systems.

- Map: Identify and characterize AI risks, including potential harms, vulnerabilities, and threats across the AI lifecycle.

- Measure: Evaluate AI risks, using quantitative and qualitative methods to assess the likelihood and impact of identified harms.

- Manage: Prioritize and implement risk responses, developing strategies to mitigate, transfer, avoid, or accept AI-related risks.
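To make the four functions more concrete, here is a minimal sketch of an AI risk register in Python. It is not part of the NIST framework itself: the 1-5 likelihood and impact scales, the score thresholds, and the system and owner names are hypothetical choices for illustration, and a real program would derive them from the organization's own Govern policies.

```python
from dataclasses import dataclass
from enum import Enum


class Response(Enum):
    """The four risk responses listed under the Manage function."""
    MITIGATE = "mitigate"
    TRANSFER = "transfer"
    AVOID = "avoid"
    ACCEPT = "accept"


@dataclass
class AIRisk:
    system: str        # Map: which AI system the risk belongs to
    description: str   # Map: the characterized harm
    likelihood: int    # Measure: hypothetical 1-5 scale
    impact: int        # Measure: hypothetical 1-5 scale
    owner: str         # Govern: the accountable person or role

    def __post_init__(self) -> None:
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5, got {value}")

    def score(self) -> int:
        """Likelihood x impact, giving a score from 1 to 25."""
        return self.likelihood * self.impact


def choose_response(risk: AIRisk, accept_below: int = 6, avoid_at: int = 20) -> Response:
    """Manage: propose a response from illustrative score thresholds.

    TRANSFER is never proposed automatically; moving a risk to a vendor
    or insurer is left to the accountable owner.
    """
    s = risk.score()
    if s >= avoid_at:
        return Response.AVOID
    if s < accept_below:
        return Response.ACCEPT
    return Response.MITIGATE


def prioritize(register: list[AIRisk]) -> list[tuple[AIRisk, Response]]:
    """Order risks from highest to lowest score, each with a proposed response."""
    ranked = sorted(register, key=lambda r: r.score(), reverse=True)
    return [(r, choose_response(r)) for r in ranked]


if __name__ == "__main__":
    register = [
        AIRisk("resume-screener", "Lower selection rates for some groups", 4, 5, "HR analytics lead"),
        AIRisk("chat-assistant", "Occasional off-topic answers", 2, 2, "Support operations lead"),
        AIRisk("credit-model", "Adverse-action reasons are hard to explain", 3, 4, "Model risk manager"),
    ]
    for risk, response in prioritize(register):
        print(risk.system, risk.score(), response.value)
    # resume-screener 20 avoid
    # credit-model 12 mitigate
    # chat-assistant 4 accept
```

Keeping the thresholds as parameters lets a governance committee tune them without touching the scoring logic, and the owner field keeps every risk tied to an accountable person.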

Implementing the NIST AI RMF requires a commitment to continuous improvement and adaptation. As AI technologies evolve, so too must the strategies for managing their risks. Businesses should view the AI RMF not as a static checklist, but as a dynamic tool for fostering responsible innovation and building public trust in their AI applications.

Framework 2: Principles of the OECD AI Recommendation

The Organisation for Economic Co-operation and Development (OECD) AI Recommendation, although an international framework, carries significant weight in the US: the United States adhered to it in 2019, and its principles are referenced by many national AI strategies. These principles provide a high-level ethical compass for the responsible stewardship of trustworthy AI, influencing policy discussions and best practices across various sectors.

The OECD’s principles are designed to promote AI that is innovative and trustworthy while respecting human rights and democratic values. For US businesses, aligning with these principles demonstrates a commitment to global best practices and can ease international collaboration, especially as many jurisdictions’ AI policies draw on the same principles. They offer a widely recognized baseline for ethical AI development and deployment.

Key OECD AI Principles for Businesses

The OECD AI Recommendation outlines five complementary values-based principles and five policy recommendations. The values-based principles are particularly relevant for businesses seeking to embed ethics into their AI systems and operations, providing a clear ethical foundation.

- Inclusive Growth, Sustainable Development and Well-being: AI should benefit all people and the planet, fostering fair societies.

- Human-centered Values and Fairness: AI systems should respect human rights and fundamental freedoms, ensuring fairness and non-discrimination.

- Transparency and Explainability: AI systems should be transparent, allowing for understanding of their decision-making processes where appropriate.

- Robustness, Security and Safety: AI systems should be reliable, secure, and operate as intended, minimizing unintended consequences.

- Accountability: Organizations and individuals deploying AI should be accountable for its proper functioning and adherence to ethical principles.
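The Transparency and Explainability principle does not prescribe a technique. As one illustration, the Python sketch below assumes a simple linear scoring model and reports each feature's contribution to a single decision relative to a reference profile. The feature names, weights, and baseline values are hypothetical. The decomposition is exact only for linear models; nonlinear models need approximate attribution methods such as SHAP.

```python
def score(weights: dict[str, float], features: dict[str, float], bias: float = 0.0) -> float:
    """Linear scoring model: bias + sum of weight * feature value."""
    return bias + sum(w * features[name] for name, w in weights.items())


def explain(weights: dict[str, float], baseline: dict[str, float],
            applicant: dict[str, float]) -> list[tuple[str, float]]:
    """Per-feature contributions relative to a reference (baseline) profile.

    For a linear model, contribution_i = w_i * (x_i - baseline_i), and the
    contributions sum (up to floating-point rounding) to
    score(applicant) - score(baseline). The most influential features come first.
    """
    contributions = [
        (name, w * (applicant[name] - baseline[name])) for name, w in weights.items()
    ]
    return sorted(contributions, key=lambda item: abs(item[1]), reverse=True)


if __name__ == "__main__":
    # Hypothetical model and profiles, for illustration only
    weights = {"income_k": 0.02, "debt_ratio": -1.5, "late_payments": -0.4}
    baseline = {"income_k": 60, "debt_ratio": 0.3, "late_payments": 1}
    applicant = {"income_k": 45, "debt_ratio": 0.6, "late_payments": 3}

    print(f"score: {score(weights, applicant):.2f}")
    for name, contribution in explain(weights, baseline, applicant):
        print(f"{name}: {contribution:+.2f}")
```

Explaining against a baseline, rather than against zero, gives statements a person can act on, such as "three late payments lowered the score most compared with a typical applicant."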

Adopting the OECD principles goes beyond mere compliance; it’s about building a sustainable and ethical AI ecosystem. Businesses that embed these principles into their AI governance frameworks will be better equipped to navigate future regulatory landscapes and earn the trust of their stakeholders, from customers to investors.

Framework 3: Sector-Specific Guidelines and Best Practices

Beyond broad national and international frameworks, US businesses must also pay close attention to sector-specific guidelines and best practices. Many industries, recognizing the unique ethical challenges posed by AI within their domains, are developing their own sets of standards and recommendations. These specialized guidelines often provide granular detail and practical advice tailored to the specific risks and opportunities of a given sector.

For example, the healthcare industry faces distinct ethical considerations related to patient data privacy, diagnostic accuracy, and algorithmic bias in clinical decision-making. Similarly, the financial services sector must contend with issues of fair lending, fraud detection, and consumer protection when deploying AI. Understanding and implementing these sector-specific frameworks is crucial for targeted compliance and responsible innovation.

Examples of Sector-Specific AI Ethics

Different industries have developed or are developing guidelines to address their unique AI challenges:

- Healthcare: The FDA provides guidance on AI/ML-based medical devices, focusing on safety, effectiveness, and transparency in clinical applications.

- Financial Services: Regulators like the Consumer Financial Protection Bureau (CFPB) are scrutinizing AI models for bias in lending and credit scoring, pushing for explainability and fairness; AI used in insurance falls largely under state insurance regulators.

- HR and Employment: New York City’s Local Law 144 on Automated Employment Decision Tools (AEDT) mandates bias audits for AI used in hiring and promotion, setting a precedent for other jurisdictions.

- Automotive: Autonomous vehicles raise ethical questions about liability, safety, and decision-making in critical situations, prompting industry-specific safety standards and testing practices.
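New York City's implementing rules for Local Law 144 define an impact ratio as the selection rate for a category divided by the selection rate of the most selected category. The Python sketch below computes that ratio from hypothetical counts. The category names and the review threshold are assumptions, and an actual bias audit has further requirements, such as an independent auditor and specific demographic and intersectional categories.

```python
def impact_ratios(selected: dict[str, int], applicants: dict[str, int]) -> dict[str, float]:
    """Selection rate per category divided by the highest category selection rate."""
    rates = {}
    for category, total in applicants.items():
        if total <= 0:
            raise ValueError(f"category {category!r} has no applicants")
        rates[category] = selected.get(category, 0) / total
    top = max(rates.values())
    if top == 0:
        raise ValueError("no candidates were selected in any category")
    return {category: rate / top for category, rate in rates.items()}


def below_threshold(ratios: dict[str, float], threshold: float = 0.8) -> list[str]:
    """Categories whose impact ratio falls under an illustrative threshold.

    Local Law 144 requires publishing impact ratios but sets no pass/fail line;
    0.8 mirrors the EEOC "four-fifths" rule of thumb and is only an example.
    """
    return sorted(category for category, ratio in ratios.items() if ratio < threshold)


if __name__ == "__main__":
    # Hypothetical counts for three anonymized categories
    applicants = {"group_a": 200, "group_b": 150, "group_c": 50}
    selected = {"group_a": 60, "group_b": 30, "group_c": 14}
    ratios = impact_ratios(selected, applicants)
    for category, ratio in ratios.items():
        print(f"{category}: {ratio:.2f}")
    print("review:", below_threshold(ratios))
```

With these counts, group_b's selection rate (20%) is two-thirds of group_a's (30%), so it is the one category flagged for review.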

Businesses operating in regulated sectors cannot rely solely on general AI ethics frameworks. They must actively engage with their industry associations, regulatory bodies, and legal counsel to stay abreast of the latest sector-specific mandates and best practices. This layered approach to AI ethics ensures comprehensive coverage and robust compliance.

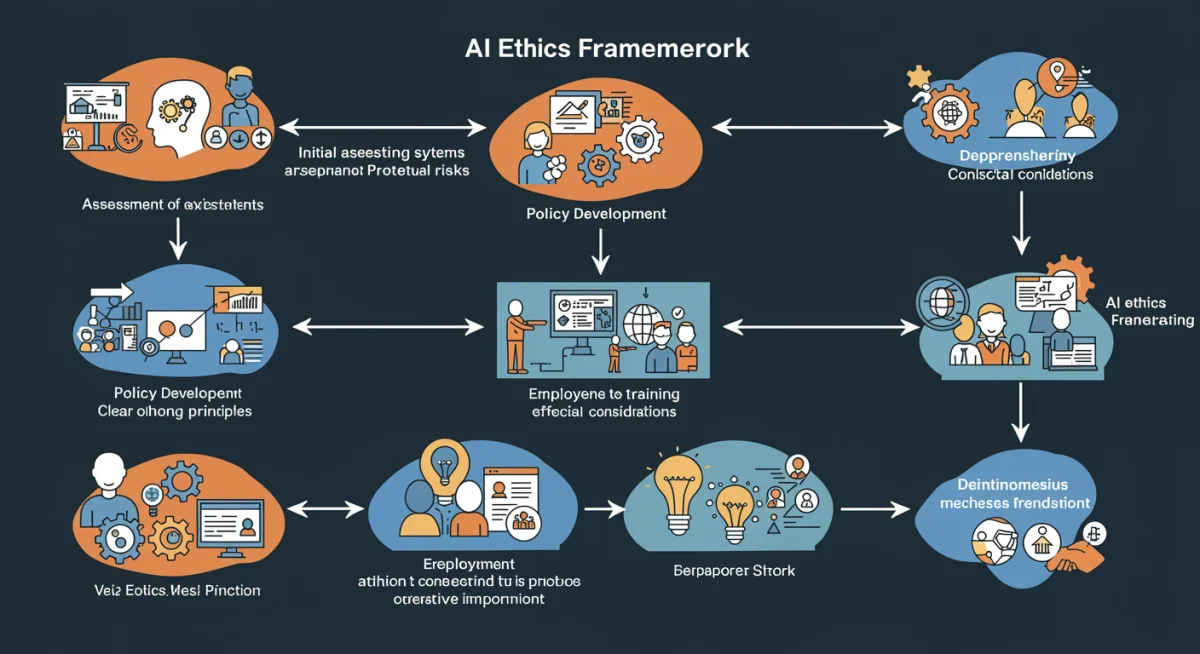

Framework 4: Internal Governance and Accountability Structures

While external frameworks provide essential guidance, the most effective AI ethics strategy originates from within an organization. Establishing robust internal governance and accountability structures is paramount for operationalizing ethical AI principles. This involves creating dedicated roles, policies, and processes that embed ethical considerations into every stage of AI development and deployment.

An internal governance framework ensures that ethical decision-making is not left to individual discretion but is systematically integrated into the company’s culture and operations. It provides clear lines of responsibility, mechanisms for oversight, and channels for addressing ethical concerns, fostering a culture of responsible innovation throughout the organization.

Key Elements of Internal AI Governance

Effective internal governance involves several critical components:

- AI Ethics Committee: A cross-functional team responsible for developing, reviewing, and enforcing internal AI ethics policies and guidelines. This committee should include diverse perspectives from legal, technical, ethics, and business functions.

- Ethical AI by Design Principles: Integrating ethical considerations from the initial design phase of AI systems, rather than attempting to retrofit them later. This includes privacy-by-design and fairness-by-design approaches.

- Regular Audits and Assessments: Conducting periodic internal and external audits of AI systems to identify and mitigate biases, ensure transparency, and verify compliance with internal policies and external regulations.

- Employee Training and Awareness: Providing comprehensive training to all employees involved in AI development, deployment, or decision-making on ethical AI principles, company policies, and relevant regulations.

- Whistleblower Protection and Grievance Mechanisms: Establishing clear and safe channels for employees and external stakeholders to report ethical concerns or potential harms caused by AI systems, without fear of retaliation.
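One way to operationalize these elements is a pre-deployment gate that blocks release until the ethics committee has signed off, an audit is current, and the required staff are trained. The Python sketch below is hypothetical: the record fields, the 365-day audit window, and the names are assumptions, not requirements of any framework discussed here.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta
from typing import Optional


@dataclass
class ReleaseRecord:
    """Hypothetical evidence an AI ethics committee might require before deployment."""
    system: str
    committee_approved: bool
    last_audit: Optional[date]
    required_training: set[str] = field(default_factory=set)   # staff who must be trained
    completed_training: set[str] = field(default_factory=set)  # staff who finished training


def release_blockers(rec: ReleaseRecord, today: date, audit_max_age_days: int = 365) -> list[str]:
    """Return the reasons a system may not ship yet; an empty list means the gate passes."""
    blockers = []
    if not rec.committee_approved:
        blockers.append("missing AI ethics committee approval")
    if rec.last_audit is None:
        blockers.append("no audit on record")
    elif today - rec.last_audit > timedelta(days=audit_max_age_days):
        blockers.append(f"last audit is older than {audit_max_age_days} days")
    untrained = rec.required_training - rec.completed_training
    if untrained:
        blockers.append("training incomplete for: " + ", ".join(sorted(untrained)))
    return blockers


if __name__ == "__main__":
    record = ReleaseRecord(
        system="resume-screener",
        committee_approved=True,
        last_audit=date(2024, 3, 1),
        required_training={"alice", "bob"},
        completed_training={"alice"},
    )
    print(release_blockers(record, today=date(2024, 9, 1)))
    # ['training incomplete for: bob']
```

Returning every blocker at once, rather than stopping at the first, gives the committee a complete to-do list for each release.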

Implementing strong internal governance not only ensures compliance but also enhances a company’s reputation, attracts top talent, and builds long-term trust with customers and partners. It transforms ethical AI from a theoretical concept into a practical, actionable business strategy.

Framework 5: Data Governance and Privacy by Design

At the heart of ethical AI lies responsible data governance and the principle of privacy by design. AI systems are inherently data-driven, and the quality, provenance, and ethical handling of data directly impact the fairness, accuracy, and trustworthiness of AI outputs. Businesses must implement stringent data governance policies to ensure data is collected, stored, processed, and used in an ethical and compliant manner.

Privacy by design, a long-established principle in privacy practice, extends this further by calling for privacy considerations to be embedded into the very architecture of AI systems and data processing activities from the earliest stages. This proactive approach helps prevent privacy breaches and protects individual rights throughout the AI lifecycle.

Pillars of Data Governance for Ethical AI

Effective data governance is multi-faceted and requires a concerted effort across an organization:

- Data Minimization: Collecting only the data necessary for a specific purpose, reducing the risk of misuse or breach.

- Data Anonymization and Pseudonymization: Employing techniques to protect individual identities when using data for AI training or analysis, especially for sensitive information.

- Consent Management: Establishing clear and transparent processes for obtaining and honoring user consent and opt-out choices for data collection and use, in line with regulations such as the CCPA.

- Data Provenance and Quality: Ensuring that data sources are reliable and representative, and checking them for biases that could lead to discriminatory AI outcomes.

- Data Security: Implementing robust cybersecurity measures to protect AI training data and deployed models from unauthorized access, alteration, or destruction.
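Several of these pillars can be enforced in the data pipeline itself. The following Python sketch, using only the standard library, combines data minimization (a field allowlist), pseudonymization (a keyed HMAC-SHA256 hash), and a consent check. The field names, the consent flag, and the key handling are hypothetical. Pseudonymized data can still count as personal information under many privacy laws, so the key should be stored separately and access-controlled.

```python
import hashlib
import hmac

# Hypothetical allowlist: only the fields this model's stated purpose needs
ALLOWED_FIELDS = {"customer_id", "tenure_months", "plan", "monthly_usage_gb"}
# Direct identifiers to replace with pseudonyms before training
PSEUDONYMIZED_FIELDS = {"customer_id"}


def pseudonymize(value: str, key: bytes) -> str:
    """HMAC-SHA256 token: the same value and key always yield the same token,
    so records can still be joined, but without the key nobody can recompute
    tokens from known identifiers."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def prepare_record(raw: dict, key: bytes) -> dict:
    """Data minimization (drop non-allowlisted fields), then pseudonymization."""
    record = {name: value for name, value in raw.items() if name in ALLOWED_FIELDS}
    for name in PSEUDONYMIZED_FIELDS:
        if name in record:
            record[name] = pseudonymize(str(record[name]), key)
    return record


def training_rows(raw_rows: list[dict], key: bytes) -> list[dict]:
    """Consent management: keep only rows whose owners opted in to model training."""
    return [
        prepare_record(row, key)
        for row in raw_rows
        if row.get("consent_model_training") is True  # hypothetical consent flag
    ]


if __name__ == "__main__":
    key = b"load-this-from-a-secrets-manager"  # placeholder; never hard-code real keys
    rows = [
        {"customer_id": "C-1001", "email": "a@example.com", "plan": "basic",
         "tenure_months": 14, "consent_model_training": True},
        {"customer_id": "C-1002", "email": "b@example.com", "plan": "pro",
         "tenure_months": 3, "consent_model_training": False},
    ]
    for row in training_rows(rows, key):
        print(row)
```

A keyed hash is used instead of a plain SHA-256 digest because customer IDs are often guessable, and an unkeyed hash of a guessable value can be reversed by hashing candidate IDs.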

Beyond these technical measures, fostering a culture of data ethics within the organization is critical. This involves regular training for data scientists, engineers, and legal teams on responsible data handling, privacy regulations, and the ethical implications of their work. By prioritizing data governance and privacy by design, US businesses can build AI systems that are not only powerful but also trustworthy and respectful of individual rights.

The Path Forward: Integrating and Adapting AI Ethics

Implementing these five AI ethics frameworks is not a one-time task but an ongoing commitment. The landscape of AI technology and regulation is constantly evolving, requiring businesses to remain agile and adaptable. A holistic approach that integrates these frameworks into a cohesive strategy will be the most effective way for US businesses to navigate the complexities of AI by 2025 and beyond.

This integration involves creating a feedback loop where insights from internal audits, regulatory updates, and stakeholder feedback continuously inform and refine the organization’s AI ethics policies and practices. It also means fostering a culture where ethical considerations are a core part of every decision related to AI, from initial concept to final deployment and maintenance.

Key Steps for Continuous Adaptation

To ensure long-term success in AI ethics, businesses should consider:

- Cross-Functional Collaboration: Breaking down silos between legal, technical, business, and ethics teams to ensure a unified approach to AI governance.

- Continuous Monitoring and Evaluation: Regularly assessing the performance and impact of AI systems for fairness, transparency, and accuracy, and addressing any emerging issues promptly.

- Engagement with Stakeholders: Actively seeking input from employees, customers, and civil society organizations on AI ethics concerns and incorporating their perspectives into policy development.

- Investment in Ethical AI Tools: Utilizing tools and technologies designed to detect bias, enhance explainability, and ensure the security of AI systems.

- Advocacy and Policy Shaping: Participating in industry forums and engaging with policymakers to help shape future AI regulations in a way that promotes responsible innovation.
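For the Continuous Monitoring and Evaluation step, a minimal Python sketch can track one fairness metric, the demographic parity difference (the gap between the highest and lowest approval rates across groups), per reporting window and flag windows that exceed a threshold. The group labels, the monthly windows, and the 0.1 threshold are hypothetical, and demographic parity is only one of several fairness metrics; which one fits depends on the use case and applicable law.

```python
from collections import defaultdict


def positive_rate_gap(decisions: list[tuple[str, bool]]) -> float:
    """Demographic parity difference: highest minus lowest approval rate across groups."""
    totals: dict[str, int] = defaultdict(int)
    approvals: dict[str, int] = defaultdict(int)
    for group, approved in decisions:
        totals[group] += 1
        approvals[group] += int(approved)
    if not totals:
        return 0.0  # an empty window has no measurable gap
    rates = [approvals[group] / totals[group] for group in totals]
    return max(rates) - min(rates)


def monitor(windows, max_gap: float = 0.1):
    """Yield (window label, gap, alert flag) for each window of (group, approved) decisions."""
    for label, decisions in windows:
        gap = positive_rate_gap(decisions)
        yield label, gap, gap > max_gap


if __name__ == "__main__":
    # Hypothetical monthly decision logs
    windows = [
        ("2025-01", [("a", True), ("a", False), ("b", True), ("b", False)]),
        ("2025-02", [("a", True), ("a", True), ("b", False), ("b", True)]),
    ]
    for label, gap, alert in monitor(windows):
        print(label, f"gap={gap:.2f}", "ALERT" if alert else "ok")
    # 2025-01 gap=0.00 ok
    # 2025-02 gap=0.50 ALERT
```

In practice an alert would feed the feedback loop described above, prompting review by the system owner rather than automatically changing the model.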

By proactively embracing these frameworks and committing to continuous adaptation, US businesses can not only avoid penalties but also unlock the full potential of AI as a force for good. Responsible AI is not just about compliance; it’s about building a better, more equitable, and sustainable future.

| Key Framework | Brief Description |
|---|---|
| NIST AI RMF | Voluntary guidelines for managing AI risks across the AI lifecycle (Govern, Map, Measure, Manage). |
| OECD AI Principles | International ethical guidelines emphasizing human-centered values, transparency, and accountability. |
| Sector-Specific Guidelines | Tailored ethical and compliance standards for industries like healthcare, finance, and HR. |
| Internal Governance | Company-specific policies, committees, and training to embed AI ethics internally. |
| Data Governance and Privacy by Design | Policies for collecting, protecting, and using AI data responsibly, with privacy built in from the earliest stages. |

Frequently Asked Questions About AI Ethics Frameworks

Why is it urgent for US businesses to implement AI ethics frameworks by 2025?

It’s urgent because evolving regulatory landscapes, increased public scrutiny, and the potential for significant penalties demand proactive measures. Implementing frameworks by 2025 helps businesses ensure compliance, build trust, and avoid legal and reputational damage in a rapidly changing AI environment.

What is the NIST AI RMF, and why is it important?

The NIST AI RMF is a voluntary framework providing comprehensive guidelines for managing AI risks across the AI lifecycle. It’s important because it serves as a leading benchmark for best practices, guides organizations in identifying, measuring, and mitigating AI-related harms, and is increasingly referenced by regulators.

How do sector-specific guidelines differ from general AI ethics frameworks?

Sector-specific guidelines offer tailored ethical and compliance standards for particular industries, such as healthcare or finance. They address the granular details and specific risks of that sector, complementing broader frameworks like NIST or OECD with specialized practical advice.

What role does internal governance play in AI ethics?

Internal governance is crucial for operationalizing AI ethics within an organization. It involves establishing dedicated committees, policies, and training programs to embed ethical considerations into every stage of AI development and deployment, ensuring accountability and fostering a culture of responsible innovation.

Why are data governance and privacy by design essential for ethical AI?

Data governance and privacy by design are fundamental because AI systems are data-driven. They ensure data is collected, processed, and used ethically by minimizing collection, obtaining consent, and protecting privacy from the outset. This helps reduce bias, enhances transparency, and builds trust in AI systems.

Conclusion

The landscape of artificial intelligence is rapidly evolving, bringing with it both immense potential and significant ethical challenges. For US businesses, the period leading up to 2025 marks a critical juncture, demanding the proactive implementation of robust AI ethics frameworks. By embracing guidelines such as the NIST AI RMF, the OECD AI principles, sector-specific mandates, strong internal governance, and rigorous data privacy by design, organizations can navigate this complex environment successfully. Beyond mere compliance, embedding ethical AI practices fosters trust, enhances brand reputation, and positions businesses as leaders in responsible innovation, ensuring a sustainable and equitable future for AI deployment.